It is December again, and another wonderful year is coming to an end. It's been a long time since my last post here; I was extremely busy with my job at Oracle. Even so, I managed to do some good work in deep learning.

Courses

Over the last 5 months, I completed some great deep learning courses on Coursera and YouTube.

The deeplearning.ai specialization is one of the courses I took during that time. It is a package of 5 courses that goes from the basics of neural networks to advanced topics such as CNNs, RNNs, GRUs and LSTMs. The subscription costs about Rs 3000 per month, and the whole package takes roughly 3-4 months to complete. Working through it, I was able to understand and implement most of the recent concepts in deep learning. I highly recommend it to anyone interested in learning these advanced concepts.

Deep Learning Frameworks

I am now able to work with several deep learning frameworks, including TensorFlow, PyTorch and Keras. I also took a DataCamp course to learn more about NumPy.

Kaggle

I finally started participating in Kaggle competitions, about two years after I first heard of Kaggle. Most recently, I took part in the PUBG kernel competition, where the goal is to predict a player's chance of winning the game. Kaggle is more than just competitions, though. Its Kaggle Learn section offers free tutorials that range from the basics of machine learning to advanced topics such as implementing CNNs. Many people also share their kernels, which contain extremely valuable information about techniques, algorithms and implementation details.

Learn Maths

I started relearning mathematical topics such as probability, linear algebra and vector calculus, working through my old college textbooks. Knowing these concepts really helps when reading math-heavy research papers.

Code

I have also written code for some of the ML algorithms I learned, and I have uploaded some of it to my GitHub repo.

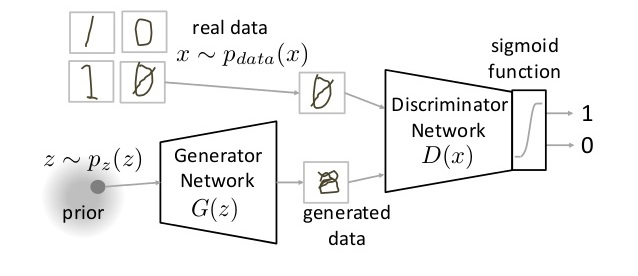

The following is an interview video with an ML PhD student. I particularly liked it for the quality of the discussion on GANs, so I am sharing it here.